In this work, we introduce Ground-A-Video, a novel groundings-guided video-to-video translation framework. Recent video editing methods have shown promising results in single-attribute editing and style transfer, either by training text-to-video (T2V) models on text-video data or by adopting training-free approaches. In multi-attribute editing scenarios, however, they fall short in three ways: they omit or overlook intended attribute changes, modify the wrong elements of the input video, and fail to preserve regions of the input video that should remain intact. Ground-A-Video achieves temporally consistent multi-attribute editing of input videos in a training-free manner, without the aforementioned shortcomings. Central to our method is Cross-Frame Gated Attention, which incorporates groundings information into the latent representations in a temporally consistent fashion, along with Modulated Cross-Attention and optical-flow-guided smoothing of the inverted latents. Extensive experiments and applications demonstrate that Ground-A-Video, used zero-shot, outperforms baseline methods in edit accuracy and frame consistency.
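To make the idea of gated, cross-frame grounding injection more concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the function name `cross_frame_gated_attention`, the tensor shapes, the absence of learned query/key/value projections, and the tanh-gated residual update are all assumptions made for illustration. It only shows how grounding tokens pooled from every frame could be attended to by each frame's latent tokens, with a scalar gate controlling how strongly the result is mixed back in.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_frame_gated_attention(visual, grounding, gate):
    """Hypothetical sketch of gated attention over groundings from all frames.

    visual:    (F, N, D) latent tokens for F frames
    grounding: (F, M, D) grounding tokens for each frame
    gate:      scalar; tanh(gate) scales the residual update (assumption)
    """
    F, N, D = visual.shape
    # Pool the grounding tokens of every frame so each frame sees all of them.
    shared = grounding.reshape(1, -1, D).repeat(F, axis=0)  # (F, F*M, D)
    tokens = np.concatenate([visual, shared], axis=1)       # (F, N + F*M, D)
    # Plain self-attention with identity projections (a simplification).
    scores = tokens @ tokens.transpose(0, 2, 1) / np.sqrt(D)
    attended = softmax(scores) @ tokens
    # Keep only the outputs at visual-token positions and gate the residual.
    return visual + np.tanh(gate) * attended[:, :N, :]


rng = np.random.default_rng(0)
latents = rng.normal(size=(3, 4, 8))     # 3 frames, 4 latent tokens, dim 8
groundings = rng.normal(size=(3, 2, 8))  # 2 grounding tokens per frame
edited = cross_frame_gated_attention(latents, groundings, gate=1.0)
print(edited.shape)
```

With `gate=0.0` the function returns the latents unchanged, which is the usual motivation for a gated residual: the grounding branch can be introduced without disturbing the base model's behavior.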

[Video comparison, four examples. Panels: Input Video; Tune A Video w/ ControlNet; Control A Video; ControlVideo; Gen-1; Ground A Video (ours)]

[Video comparison. Panels: Input Video; Tune A Video w/ ControlNet; Control A Video; Ground A Video (ours)]

[Video comparison. Panels: Input Video; Gen-1; Control A Video; Ground A Video (ours)]

[Video panels: Input Video; cat → puppy; cat → tiger; cat → puppy + lake; cat → tiger + lawn; cat → dog + on the beach + under the sky; cat → tiger + on the snow + in the mountains; cat → white puppy + on the sand + in the dune]

[Video panels: Input Video; bird → blue bird; bird → dragonfly, lake → emerald lake; bird → crow, lake → desert, forest → forest on fire]

[Video panels: Input Video; swan → pink swan, bushes → white roses; swan → blue swan, bushes → snowy bushes; swan → blue swan, bushes → snowy bushes, lake → lagoon]

[Video panels: Input Video; dog → lego dog; man → Homer Simpson; road → pond; road → ice; man → Iron Man, dog → sheep; man → Iron Man, dog → sheep, road → lake; dog → goat, man → farmer, road → snow road]

[Video panels, four input/output pairs: Input Video, + Ukiyo-e art; Input Video, + Monet style; Input Video, + Cezanne style; Input Video, + Starry night style]

[Video panels: Input Video; + Chinese painting style; + Van Gogh starry night style; + Van Gogh starry night style, dog, man → tiger, Superman; + Van Gogh starry night style, dog, man → cheetah, Superman]

Input Pose

|

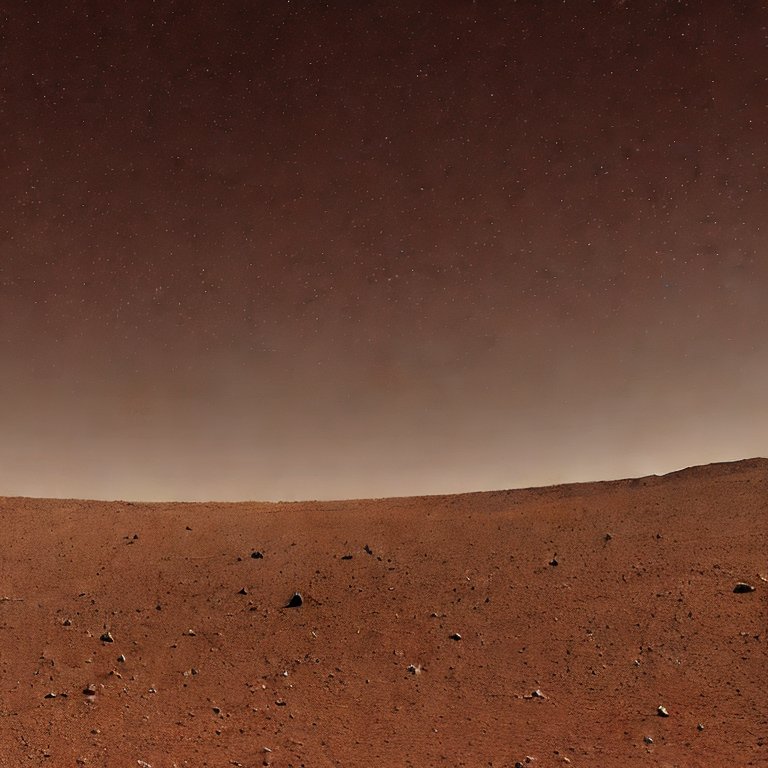

Input Background

|

Stormtrooper is

dancing on Mars. |

Input Pose

|

Input Background

|

Iron man is

dancing on the sand. |

|---|

@article{jeong2023ground,
  title={Ground-A-Video: Zero-shot Grounded Video Editing using Text-to-image Diffusion Models},
  author={Jeong, Hyeonho and Ye, Jong Chul},
  journal={arXiv preprint arXiv:2310.01107},
  year={2023}
}